Just as with the hiring assessment, transparency and clarity around assessments during the apprenticeship are critical, and not just for apprentices! Managers and mentors must be aligned too, because misaligned expectations can jeopardize conversion goals.

Establish an evaluation framework for conducting performance assessments

Check out these tips for developing and implementing an evaluation framework (rubric) to use with apprentices throughout their learning journey. Your company may already have a performance evaluation framework in place that is worth aligning with. Building on that existing framework and adjusting it for apprentice-specific expectations will make stakeholder alignment much easier than starting from scratch. The goal of aligning around a shared framework is to reduce subjectivity in evaluation. This boosts equity for all apprentices and, in particular, reduces bias when evaluating apprentices from underrepresented racial groups or from alternative education backgrounds.

1) Revisit technical & non-technical skill requirements for conversion

You already thought through these requirements when designing the program and the hiring assessment. This is a chance to revisit and refine them, and to ensure your evaluation rubric links directly to them.

Questions to Ask:

- How might we provide clarity and transparency around the technical and non-technical skills an apprentice is expected to attain in order to achieve conversion?

- How can we give apprentices a clear picture of what’s expected of their performance at each phase of the apprenticeship?

Tips:

- Technical skill requirement examples: writing and running code tests, conducting code reviews (giving & receiving), producing high-quality code, documenting code, etc.

- Non-technical skill requirement examples: strong collaboration, the ability to take feedback, a growth mindset

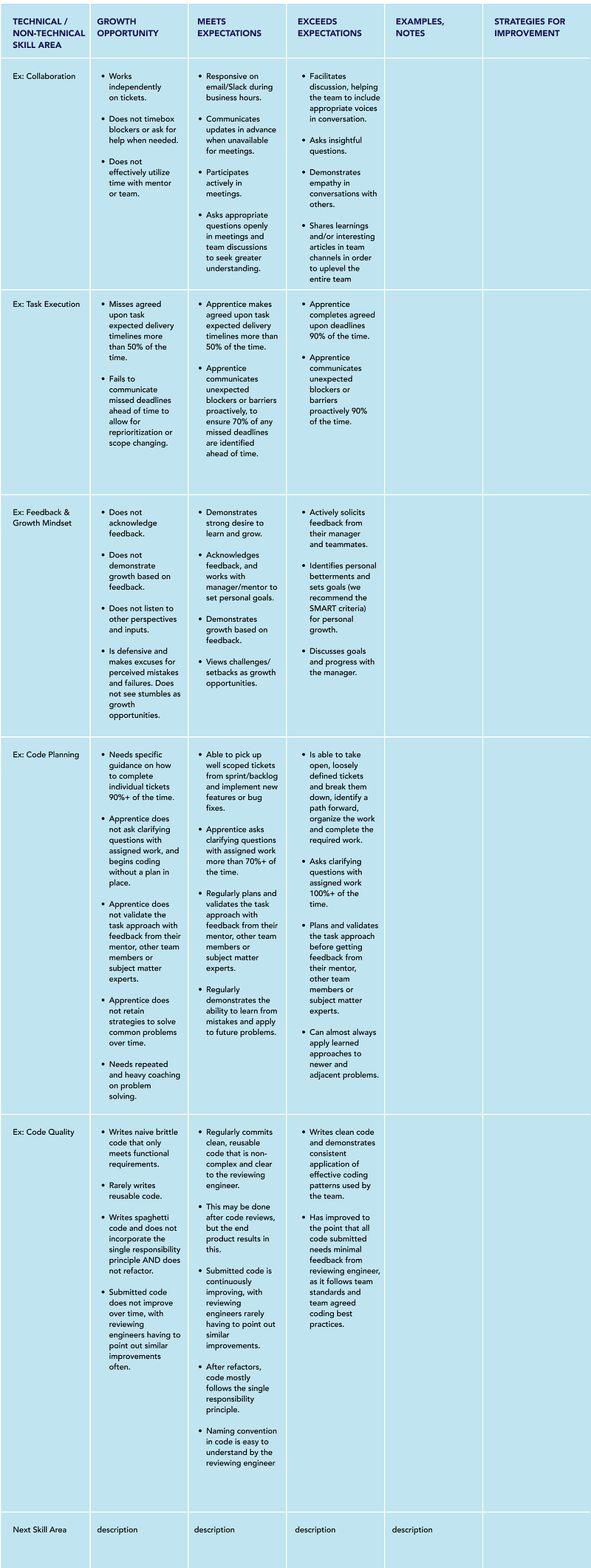

2) Develop evaluation metrics for each skill requirement

Here you will take each skill requirement and develop a clear, specific success metric for it, to avoid confusion, subjectivity, or bias when evaluating apprentices.

Questions to Ask:

- How can we be very specific in defining success metrics under each skill area?

- How can we reduce potential bias or preconceived notions from the evaluation process?

- How can we tie apprentice learning to what the company’s career level framework expects at the level at which they will convert?

Tips:

- Under each skill area, designate at least three levels of performance (Growth Opportunity, Meets Expectations, and Exceeds Expectations)

- Define criteria for each of those performance levels in a specific, measurable way that ladders up to the company’s career level framework

- Avoid relying on numerical scoring, which can skew results when evaluators over-index on a single area or apply the wrong performance criteria

- The evaluator highlights the performance level the apprentice falls into for each skill area

- Make space for evaluators to leave notes with concrete, measurable examples of the apprentice’s performance, as well as concrete strategies for improvement

- Train apprentices and their evaluators (instructors/cohort leads, technical managers, mentors) on how to use the rubric

- Example below!

TOOL: Apprentice Evaluation Rubric

Instructions for Use: (Bullet out instructions for use here):

____________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________________

3) Adapt your use of the rubric to each apprenticeship program phase

How you use the evaluation rubric with apprentices and their evaluators will change as the program progresses through multiple evaluation intervals. The purpose of this feedback is to give apprentices a chance to gut-check where they stand in their technical and non-technical skill development, and to help them understand what to keep doing well and where they need to course-correct to stay on track for conversion. One example of evaluation sequencing is to evaluate (1) during the foundational learning phase, (2) after apprentices join formal technical teams, and (3) at program end for conversion. Programs vary in structure, so tailor this sequencing to yours.

Foundational learning phase: Apprentices reach a shared baseline skill level, learn the company’s technical stack, and understand the “unstated rules” of working in the company environment before joining a formal team.

Tips:

- Curriculum instructors/cohort leads should focus their evaluation more on soft skills, such as knowing how to ask for help and how to problem-solve, than on technical skills, which can be honed later

- Accordingly, in this phase “Meets Expectations” is the more likely rating for non-technical skill areas, whereas “Growth Opportunity” is more likely for technical skill areas

- By the end of this phase, apprentices should have a clear sense of which skills they need to keep developing once they join their technical teams.

- Apprentices can be encouraged to share their performance feedback with their future manager or team mentor if they are comfortable doing so. However, the manager or team mentor does not need to be made aware of the apprentice’s performance in the foundational learning phase. This helps avoid confirmation bias and preconceived notions about the apprentice’s areas of weakness.

- Evaluations should include the apprentice cohort lead/curriculum instructor plus the apprentice’s future technical team manager and mentor

After joining technical teams: Apprentices join formal technical teams and receive feedback from their technical mentor and manager on both technical and non-technical skills demonstrated in project work.

Tips:

- Technical mentors work most closely with apprentices, helping them navigate learning expectations and understand how their performance ladders up to conversion through bi-weekly feedback sessions tied to the rubric

- A formal evaluation should also take place during this phase, involving not just the technical mentor but also the technical manager responsible for hiring decisions and the program curriculum instructor/cohort lead

- Schedule this formal evaluation early enough that apprentices have time to act on the feedback before the end of the program, when conversion decisions are made

- Skill areas assessed as “Growth Opportunity” in this phase should come with a clear growth path or improvement plan to keep the apprentice on track for conversion at program end

- Technical mentors should keep senior engineering managers up to speed on the apprentice’s status to avoid miscommunications or surprises come conversion time

End of Program/Conversion Evaluation: At the end of the program, all feedback is reviewed and weighed to decide whether the apprentice will convert into a full-time role on a company team.

Tips:

- The final evaluation is made by the technical manager who leads the team the apprentice would be hired onto.

- This final evaluation should also involve the technical mentor and the program instructor/cohort lead, who have worked more closely with the apprentice.

- At this phase, only a minority of skill areas should be marked as “Growth Opportunity”

- A majority of skill areas should be marked “Meets Expectations”

- There may be a few skill areas marked “Exceeds Expectations,” but this should not be a requirement for conversion.

- The apprentice should now be at or near the earliest technical level in the company’s “career level framework.”

— — — — — — — — — — — — — — — —

Tool and best practices co-designed with champions in tech Jacqui Watts and Kevin Berry.

Have questions or comments about the Equitable Tech Apprenticeship Equity Toolkit? Send us a note.